Build a Document AI Pipeline for Any Type of PDF with Gemini

Tables, Images, figures or equations are not problem anymore! Full Code provided.

Automated document processing is one of the biggest winners of the ChatGPT revolution, as LLMs are able to tackle a wide range of subjects and tasks in a zero-shot setting, meaning without in-domain labeled training data. This has made building AI-powered applications to process, parse, and automatically understand arbitrary documents much easier. Though naive approaches using LLMs are still hindered by non-text context, such as figures, images, and tables, this is what we will try to address in this blog post, with a special focus on PDFs.

At a basic level, PDFs are just a collection of characters, images, and lines along with their exact coordinates. They have no inherent “text” structure and were not built to be processed as text but only to be viewed as is. This is what makes working with them difficult, as text-only approaches fail to capture all the layout and visual elements in these types of documents, resulting in a significant loss of context and information.

One way to bypass this “text-only” limitation is to do heavy pre-processing of the document by detecting tables, images, and layout before feeding them to the LLM. Tables can be parsed to Markdown or JSON, images and figures can be represented by their captions, and the text can be fed as is. However, this approach requires custom models and will still result in some loss of information, so can we do better?

Multimodal LLMs

Most recent large models are now multi-modal, meaning they can process multiple modalities like text, code, and images. This opens the way to a simpler solution to our problem where one model does everything at once. So, instead of captioning images and parsing tables, we can just feed the page as an image and process it as is. Our pipeline will be able to load the PDF, extract each page as an image, split it into chunks (using the LLM), and index each chunk. If a chunk is retrieved, then the full page is included in the LLM context to perform the task. In what follows, we will detail how this can be implemented in practice.

The Pipeline

The pipeline we are implementing is a two-step process. First, we segment each page into significant chunks and summarize each of them. Second, we index chunks once then search the chunks each time we get a request and include the full context with each retrieved chunk in the LLM context.

Step 1: Page Segmentation and Summarization

We extract the pages as images and pass each of them to the multi-modal LLM to segment them. Models like Gemini can understand and process page layout easily:

- Tables are identified as one chunk.

- Figures form another chunk.

- Text blocks are segmented into individual chunks.

- …

For each element, the LLM generates a summary than can be embedded and indexed into a vector database.

Step 2: Embedding and Contextual Retrieval

In this tutorial we will use text embedding only for simplicity but one improvement would be to use vision embeddings directly.

Each entry in the database includes:

- The summary of the chunk.

- The page number where it was found.

- A link to the image representation of the full page for added context.

This schema allows for local level searches (at the chunk level) while keeping track of the context (by linking back to the full page). For example, if a search query retrieves an item, the Agent can include the entire page image to provide full layout and extra context to the LLM in order to maximize response quality.

By providing the full image, all the visual cues and important layout information (like images, titles, bullet points… ) and neighboring items (tables, paragraph, …) are available to the LLM at the time of generating a response.

Agents

We will implement each step as a separate, re-usable agent:

The first agent is for parsing, chunking, and summarization. This involves the segmentation of the document into significant chunks, followed by the generation of summaries for each of them. This agent only needs to be run once per PDF to preprocess the document.

The second agent manages indexing, search, and retrieval. This includes inserting the embedding of chunks into the vector database for efficient search. Indexing is performed once per document, while searches can be repeated as many times as needed for different queries.

For both agents, we use Gemini, a multimodal LLM with strong vision understanding abilities.

Parsing and Chunking Agent

The first agent is in charge of segmenting each page into meaningful chunks and summarizing each of them, following these steps:

Step 1: Extracting PDF Pages as Images

We use the pdf2image library. The images are then encoded in Base64 format to simplify adding them to the LLM request.

Here’s the implementation:

from document_ai_agents.document_utils import extract_images_from_pdf

from document_ai_agents.image_utils import pil_image_to_base64_jpeg

from pathlib import Path

class DocumentParsingAgent:

@classmethod

def get_images(cls, state):

"""

Extract pages of a PDF as Base64-encoded JPEG images.

"""

assert Path(state.document_path).is_file(), "File does not exist"

# Extract images from PDF

images = extract_images_from_pdf(state.document_path)

assert images, "No images extracted"

# Convert images to Base64-encoded JPEG

pages_as_base64_jpeg_images = [pil_image_to_base64_jpeg(x) for x in images]

return {"pages_as_base64_jpeg_images": pages_as_base64_jpeg_images}

extract_images_from_pdf: Extracts each page of the PDF as a PIL image.

pil_image_to_base64_jpeg: Converts the image into a Base64-encoded JPEG format.

Step 2: Chunking and Summarization

Each image is then sent to the LLM for segmentation and summarization. We use structured outputs to ensure we get the predictions in the format we expect:

from pydantic import BaseModel, Field

from typing import Literal

import json

import google.generativeai as genai

from langchain_core.documents import Document

class DetectedLayoutItem(BaseModel):

"""

Schema for each detected layout element on a page.

"""

element_type: Literal["Table", "Figure", "Image", "Text-block"] = Field(

...,

description="Type of detected item. Examples: Table, Figure, Image, Text-block."

)

summary: str = Field(..., description="A detailed description of the layout item.")

class LayoutElements(BaseModel):

"""

Schema for the list of layout elements on a page.

"""

layout_items: list[DetectedLayoutItem] = []

class FindLayoutItemsInput(BaseModel):

"""

Input schema for processing a single page.

"""

document_path: str

base64_jpeg: str

page_number: int

class DocumentParsingAgent:

def __init__(self, model_name="gemini-1.5-flash-002"):

"""

Initialize the LLM with the appropriate schema.

"""

layout_elements_schema = prepare_schema_for_gemini(LayoutElements)

self.model_name = model_name

self.model = genai.GenerativeModel(

self.model_name,

generation_config={

"response_mime_type": "application/json",

"response_schema": layout_elements_schema,

},

)

def find_layout_items(self, state: FindLayoutItemsInput):

"""

Send a page image to the LLM for segmentation and summarization.

"""

messages = [

f"Find and summarize all the relevant layout elements in this PDF page in the following format: "

f"{LayoutElements.schema_json()}. "

f"Tables should have at least two columns and at least two rows. "

f"The coordinates should overlap with each layout item.",

{"mime_type": "image/jpeg", "data": state.base64_jpeg},

]

# Send the prompt to the LLM

result = self.model.generate_content(messages)

data = json.loads(result.text)

# Convert the JSON output into documents

documents = [

Document(

page_content=item["summary"],

metadata={

"page_number": state.page_number,

"element_type": item["element_type"],

"document_path": state.document_path,

},

)

for item in data["layout_items"]

]

return {"documents": documents}

The LayoutElements schema defines the structure of the output, with each layout item type (Table, Figure, … ) and its summary.

Step 3: Parallel Processing of Pages

Pages are processed in parallel for speed. The following method creates a list of tasks to handle all the page image at once since the processing is io-bound:

from langgraph.types import Send

class DocumentParsingAgent:

@classmethod

def continue_to_find_layout_items(cls, state):

"""

Generate tasks to process each page in parallel.

"""

return [

Send(

"find_layout_items",

FindLayoutItemsInput(

base64_jpeg=base64_jpeg,

page_number=i,

document_path=state.document_path,

),

)

for i, base64_jpeg in enumerate(state.pages_as_base64_jpeg_images)

]

Each page is sent to the find_layout_items function as an independent task.

Full workflow

The agent’s workflow is built using a StateGraph, linking the image extraction and layout detection steps into a unified pipeline ->

from langgraph.graph import StateGraph, START, END

class DocumentParsingAgent:

def build_agent(self):

"""

Build the agent workflow using a state graph.

"""

builder = StateGraph(DocumentLayoutParsingState)

# Add nodes for image extraction and layout item detection

builder.add_node("get_images", self.get_images)

builder.add_node("find_layout_items", self.find_layout_items)

# Define the flow of the graph

builder.add_edge(START, "get_images")

builder.add_conditional_edges("get_images", self.continue_to_find_layout_items)

builder.add_edge("find_layout_items", END)

self.graph = builder.compile()

To run the agent on a sample PDF we do:

if __name__ == "__main__":

_state = DocumentLayoutParsingState(

document_path="path/to/document.pdf"

)

agent = DocumentParsingAgent()

# Step 1: Extract images from PDF

result_images = agent.get_images(_state)

_state.pages_as_base64_jpeg_images = result_images["pages_as_base64_jpeg_images"]

# Step 2: Process the first page (as an example)

result_layout = agent.find_layout_items(

FindLayoutItemsInput(

base64_jpeg=_state.pages_as_base64_jpeg_images[0],

page_number=0,

document_path=_state.document_path,

)

)

# Display the results

for item in result_layout["documents"]:

print(item.page_content)

print(item.metadata["element_type"])

This results in a parsed, segmented, and summarized representation of the PDF, which is the input of the second agent we will build next.

RAG Agent

This second agent handles the indexing and retrieval part. It saves the documents of the previous agent into a vector database and uses the result for retrieval. This can be split into two seprate steps, indexing and retrieval.

Step 1: Indexing the Split Document

Using the summaries generated, we vectorize them and save them in a ChromaDB database:

class DocumentRAGAgent:

def index_documents(self, state: DocumentRAGState):

"""

Index the parsed documents into the vector store.

"""

assert state.documents, "Documents should have at least one element"

# Check if the document is already indexed

if self.vector_store.get(where={"document_path": state.document_path})["ids"]:

logger.info(

"Documents for this file are already indexed, exiting this node"

)

return # Skip indexing if already done

# Add parsed documents to the vector store

self.vector_store.add_documents(state.documents)

logger.info(f"Indexed {len(state.documents)} documents for {state.document_path}")

The index_documents method embeds the chunk summaries into the vector store. We keep metadata such as the document path and page number for later use.

Step 2: Handling Questions

When a user asks a question, the agent searches for the most relevant chunks in the vector store. It retrieves the summaries and corresponding page images for contextual understanding.

class DocumentRAGAgent:

def answer_question(self, state: DocumentRAGState):

"""

Retrieve relevant chunks and generate a response to the user's question.

"""

# Retrieve the top-k relevant documents based on the query

relevant_documents: list[Document] = self.retriever.invoke(state.question)

# Retrieve corresponding page images (avoid duplicates)

images = list(

set(

[

state.pages_as_base64_jpeg_images[doc.metadata["page_number"]]

for doc in relevant_documents

]

)

)

logger.info(f"Responding to question: {state.question}")

# Construct the prompt: Combine images, relevant summaries, and the question

messages = (

[{"mime_type": "image/jpeg", "data": base64_jpeg} for base64_jpeg in images]

+ [doc.page_content for doc in relevant_documents]

+ [

f"Answer this question using the context images and text elements only: {state.question}",

]

)

# Generate the response using the LLM

response = self.model.generate_content(messages)

return {"response": response.text, "relevant_documents": relevant_documents}

The retriever queries the vector store to find the chunks most relevant to the user’s question. We then build the context for the LLM (Gemini), which combines text chunks and images in order to generate a response.

The full agent Workflow

The agent workflow has two stages, an indexing stage and a question answering stage:

class DocumentRAGAgent:

def build_agent(self):

"""

Build the RAG agent workflow.

"""

builder = StateGraph(DocumentRAGState)

# Add nodes for indexing and answering questions

builder.add_node("index_documents", self.index_documents)

builder.add_node("answer_question", self.answer_question)

# Define the workflow

builder.add_edge(START, "index_documents")

builder.add_edge("index_documents", "answer_question")

builder.add_edge("answer_question", END)

self.graph = builder.compile()

Example run

if __name__ == "__main__":

from pathlib import Path

# Import the first agent to parse the document

from document_ai_agents.document_parsing_agent import (

DocumentLayoutParsingState,

DocumentParsingAgent,

)

# Step 1: Parse the document using the first agent

state1 = DocumentLayoutParsingState(

document_path=str(Path(__file__).parents[1] / "data" / "docs.pdf")

)

agent1 = DocumentParsingAgent()

result1 = agent1.graph.invoke(state1)

# Step 2: Set up the second agent for retrieval and answering

state2 = DocumentRAGState(

question="Who was acknowledged in this paper?",

document_path=str(Path(__file__).parents[1] / "data" / "docs.pdf"),

pages_as_base64_jpeg_images=result1["pages_as_base64_jpeg_images"],

documents=result1["documents"],

)

agent2 = DocumentRAGAgent()

# Index the documents

agent2.graph.invoke(state2)

# Answer the first question

result2 = agent2.graph.invoke(state2)

print(result2["response"])

# Answer a second question

state3 = DocumentRAGState(

question="What is the macro average when fine-tuning on PubLayNet using M-RCNN?",

document_path=str(Path(__file__).parents[1] / "data" / "docs.pdf"),

pages_as_base64_jpeg_images=result1["pages_as_base64_jpeg_images"],

documents=result1["documents"],

)

result3 = agent2.graph.invoke(state3)

print(result3["response"])

With this implementation, the pipeline is complete for document processing, retrieval, and question answering.

Example: Using the Document AI Pipeline

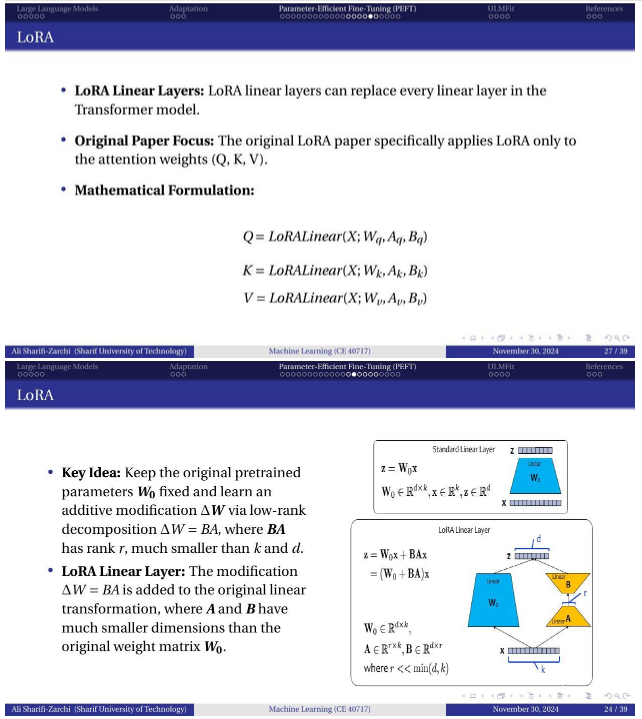

Let’s walk through a practical example using the document LLM & Adaptation.pdf , a set of 39 slides containing text, equations, and figures (CC BY 4.0).

Step 1: Parsing and summarizing the Document (Agent 1)

- Execution Time: Parsing the 39-page document took 29 seconds.

- Result: Agent 1 produces an indexed document consisting of chunk summaries and base64-encoded JPEG images of each page.

Step 2: Questioning the Document (Agent 2)

We ask the following question:

“Explain LoRA, give the relevant equations”

Result:

Retrieved pages:

Response from the LLM

The LLM was able to include equations and figures into its response by taking advantage of the visual context in generating a coherent and correct response based on the document.

Conclusion

In this quick tutorial, we saw how you can take your document AI processing pipeline a step further by leveraging the multi-modality of recent LLMs and using the full visual context available in each document, hopefully improving the quality of outputs that you are able to get from either your information extraction or RAG pipeline.

We built a stronger document segmentation step that is able to detect the important items like paragraphs, tables, and figures and summarize them, then used the result of this first step to query the collection of items and pages to give relevant and precise answers using Gemini. As a next step, you can try it on your use case and document, try to use a scalable vector database, and deploy these agents as part of your AI app.

Full code and example are available here : https://github.com/CVxTz/document_ai_agents

Thank you for reading ! 😃

Build a Document AI pipeline for ANY type of PDF With Gemini was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Build a Document AI Pipeline for Any Type of PDF with GeminiTables, Images, figures or equations are not problem anymore! Full Code provided.Photo by Matt Noble on UnsplashAutomated document processing is one of the biggest winners of the ChatGPT revolution, as LLMs are able to tackle a wide range of subjects and tasks in a zero-shot setting, meaning without in-domain labeled training data. This has made building AI-powered applications to process, parse, and automatically understand arbitrary documents much easier. Though naive approaches using LLMs are still hindered by non-text context, such as figures, images, and tables, this is what we will try to address in this blog post, with a special focus on PDFs.At a basic level, PDFs are just a collection of characters, images, and lines along with their exact coordinates. They have no inherent “text” structure and were not built to be processed as text but only to be viewed as is. This is what makes working with them difficult, as text-only approaches fail to capture all the layout and visual elements in these types of documents, resulting in a significant loss of context and information.One way to bypass this “text-only” limitation is to do heavy pre-processing of the document by detecting tables, images, and layout before feeding them to the LLM. Tables can be parsed to Markdown or JSON, images and figures can be represented by their captions, and the text can be fed as is. However, this approach requires custom models and will still result in some loss of information, so can we do better?Multimodal LLMsMost recent large models are now multi-modal, meaning they can process multiple modalities like text, code, and images. This opens the way to a simpler solution to our problem where one model does everything at once. So, instead of captioning images and parsing tables, we can just feed the page as an image and process it as is. Our pipeline will be able to load the PDF, extract each page as an image, split it into chunks (using the LLM), and index each chunk. If a chunk is retrieved, then the full page is included in the LLM context to perform the task. In what follows, we will detail how this can be implemented in practice.The PipelineThe pipeline we are implementing is a two-step process. First, we segment each page into significant chunks and summarize each of them. Second, we index chunks once then search the chunks each time we get a request and include the full context with each retrieved chunk in the LLM context.Step 1: Page Segmentation and SummarizationWe extract the pages as images and pass each of them to the multi-modal LLM to segment them. Models like Gemini can understand and process page layout easily:Tables are identified as one chunk.Figures form another chunk.Text blocks are segmented into individual chunks.…For each element, the LLM generates a summary than can be embedded and indexed into a vector database.Step 2: Embedding and Contextual RetrievalIn this tutorial we will use text embedding only for simplicity but one improvement would be to use vision embeddings directly.Each entry in the database includes:The summary of the chunk.The page number where it was found.A link to the image representation of the full page for added context.This schema allows for local level searches (at the chunk level) while keeping track of the context (by linking back to the full page). For example, if a search query retrieves an item, the Agent can include the entire page image to provide full layout and extra context to the LLM in order to maximize response quality.By providing the full image, all the visual cues and important layout information (like images, titles, bullet points… ) and neighboring items (tables, paragraph, …) are available to the LLM at the time of generating a response.AgentsWe will implement each step as a separate, re-usable agent:The first agent is for parsing, chunking, and summarization. This involves the segmentation of the document into significant chunks, followed by the generation of summaries for each of them. This agent only needs to be run once per PDF to preprocess the document.The second agent manages indexing, search, and retrieval. This includes inserting the embedding of chunks into the vector database for efficient search. Indexing is performed once per document, while searches can be repeated as many times as needed for different queries.For both agents, we use Gemini, a multimodal LLM with strong vision understanding abilities.Parsing and Chunking AgentThe first agent is in charge of segmenting each page into meaningful chunks and summarizing each of them, following these steps:Step 1: Extracting PDF Pages as ImagesWe use the pdf2image library. The images are then encoded in Base64 format to simplify adding them to the LLM request.Here’s the implementation:from document_ai_agents.document_utils import extract_images_from_pdffrom document_ai_agents.image_utils import pil_image_to_base64_jpegfrom pathlib import Pathclass DocumentParsingAgent: @classmethod def get_images(cls, state): “”” Extract pages of a PDF as Base64-encoded JPEG images. “”” assert Path(state.document_path).is_file(), “File does not exist” # Extract images from PDF images = extract_images_from_pdf(state.document_path) assert images, “No images extracted” # Convert images to Base64-encoded JPEG pages_as_base64_jpeg_images = [pil_image_to_base64_jpeg(x) for x in images] return {“pages_as_base64_jpeg_images”: pages_as_base64_jpeg_images}extract_images_from_pdf: Extracts each page of the PDF as a PIL image.pil_image_to_base64_jpeg: Converts the image into a Base64-encoded JPEG format.Step 2: Chunking and SummarizationEach image is then sent to the LLM for segmentation and summarization. We use structured outputs to ensure we get the predictions in the format we expect:from pydantic import BaseModel, Fieldfrom typing import Literalimport jsonimport google.generativeai as genaifrom langchain_core.documents import Documentclass DetectedLayoutItem(BaseModel): “”” Schema for each detected layout element on a page. “”” element_type: Literal[“Table”, “Figure”, “Image”, “Text-block”] = Field( …, description=”Type of detected item. Examples: Table, Figure, Image, Text-block.” ) summary: str = Field(…, description=”A detailed description of the layout item.”)class LayoutElements(BaseModel): “”” Schema for the list of layout elements on a page. “”” layout_items: list[DetectedLayoutItem] = []class FindLayoutItemsInput(BaseModel): “”” Input schema for processing a single page. “”” document_path: str base64_jpeg: str page_number: intclass DocumentParsingAgent: def __init__(self, model_name=”gemini-1.5-flash-002″): “”” Initialize the LLM with the appropriate schema. “”” layout_elements_schema = prepare_schema_for_gemini(LayoutElements) self.model_name = model_name self.model = genai.GenerativeModel( self.model_name, generation_config={ “response_mime_type”: “application/json”, “response_schema”: layout_elements_schema, }, ) def find_layout_items(self, state: FindLayoutItemsInput): “”” Send a page image to the LLM for segmentation and summarization. “”” messages = [ f”Find and summarize all the relevant layout elements in this PDF page in the following format: ” f”{LayoutElements.schema_json()}. ” f”Tables should have at least two columns and at least two rows. ” f”The coordinates should overlap with each layout item.”, {“mime_type”: “image/jpeg”, “data”: state.base64_jpeg}, ] # Send the prompt to the LLM result = self.model.generate_content(messages) data = json.loads(result.text) # Convert the JSON output into documents documents = [ Document( page_content=item[“summary”], metadata={ “page_number”: state.page_number, “element_type”: item[“element_type”], “document_path”: state.document_path, }, ) for item in data[“layout_items”] ] return {“documents”: documents}The LayoutElements schema defines the structure of the output, with each layout item type (Table, Figure, … ) and its summary.Step 3: Parallel Processing of PagesPages are processed in parallel for speed. The following method creates a list of tasks to handle all the page image at once since the processing is io-bound:from langgraph.types import Sendclass DocumentParsingAgent: @classmethod def continue_to_find_layout_items(cls, state): “”” Generate tasks to process each page in parallel. “”” return [ Send( “find_layout_items”, FindLayoutItemsInput( base64_jpeg=base64_jpeg, page_number=i, document_path=state.document_path, ), ) for i, base64_jpeg in enumerate(state.pages_as_base64_jpeg_images) ]Each page is sent to the find_layout_items function as an independent task.Full workflowThe agent’s workflow is built using a StateGraph, linking the image extraction and layout detection steps into a unified pipeline ->from langgraph.graph import StateGraph, START, ENDclass DocumentParsingAgent: def build_agent(self): “”” Build the agent workflow using a state graph. “”” builder = StateGraph(DocumentLayoutParsingState) # Add nodes for image extraction and layout item detection builder.add_node(“get_images”, self.get_images) builder.add_node(“find_layout_items”, self.find_layout_items) # Define the flow of the graph builder.add_edge(START, “get_images”) builder.add_conditional_edges(“get_images”, self.continue_to_find_layout_items) builder.add_edge(“find_layout_items”, END) self.graph = builder.compile()To run the agent on a sample PDF we do:if __name__ == “__main__”: _state = DocumentLayoutParsingState( document_path=”path/to/document.pdf” ) agent = DocumentParsingAgent() # Step 1: Extract images from PDF result_images = agent.get_images(_state) _state.pages_as_base64_jpeg_images = result_images[“pages_as_base64_jpeg_images”] # Step 2: Process the first page (as an example) result_layout = agent.find_layout_items( FindLayoutItemsInput( base64_jpeg=_state.pages_as_base64_jpeg_images[0], page_number=0, document_path=_state.document_path, ) ) # Display the results for item in result_layout[“documents”]: print(item.page_content) print(item.metadata[“element_type”])This results in a parsed, segmented, and summarized representation of the PDF, which is the input of the second agent we will build next.RAG AgentThis second agent handles the indexing and retrieval part. It saves the documents of the previous agent into a vector database and uses the result for retrieval. This can be split into two seprate steps, indexing and retrieval.Step 1: Indexing the Split DocumentUsing the summaries generated, we vectorize them and save them in a ChromaDB database:class DocumentRAGAgent: def index_documents(self, state: DocumentRAGState): “”” Index the parsed documents into the vector store. “”” assert state.documents, “Documents should have at least one element” # Check if the document is already indexed if self.vector_store.get(where={“document_path”: state.document_path})[“ids”]: logger.info( “Documents for this file are already indexed, exiting this node” ) return # Skip indexing if already done # Add parsed documents to the vector store self.vector_store.add_documents(state.documents) logger.info(f”Indexed {len(state.documents)} documents for {state.document_path}”)The index_documents method embeds the chunk summaries into the vector store. We keep metadata such as the document path and page number for later use.Step 2: Handling QuestionsWhen a user asks a question, the agent searches for the most relevant chunks in the vector store. It retrieves the summaries and corresponding page images for contextual understanding.class DocumentRAGAgent: def answer_question(self, state: DocumentRAGState): “”” Retrieve relevant chunks and generate a response to the user’s question. “”” # Retrieve the top-k relevant documents based on the query relevant_documents: list[Document] = self.retriever.invoke(state.question) # Retrieve corresponding page images (avoid duplicates) images = list( set( [ state.pages_as_base64_jpeg_images[doc.metadata[“page_number”]] for doc in relevant_documents ] ) ) logger.info(f”Responding to question: {state.question}”) # Construct the prompt: Combine images, relevant summaries, and the question messages = ( [{“mime_type”: “image/jpeg”, “data”: base64_jpeg} for base64_jpeg in images] + [doc.page_content for doc in relevant_documents] + [ f”Answer this question using the context images and text elements only: {state.question}”, ] ) # Generate the response using the LLM response = self.model.generate_content(messages) return {“response”: response.text, “relevant_documents”: relevant_documents}The retriever queries the vector store to find the chunks most relevant to the user’s question. We then build the context for the LLM (Gemini), which combines text chunks and images in order to generate a response.The full agent WorkflowThe agent workflow has two stages, an indexing stage and a question answering stage:class DocumentRAGAgent: def build_agent(self): “”” Build the RAG agent workflow. “”” builder = StateGraph(DocumentRAGState) # Add nodes for indexing and answering questions builder.add_node(“index_documents”, self.index_documents) builder.add_node(“answer_question”, self.answer_question) # Define the workflow builder.add_edge(START, “index_documents”) builder.add_edge(“index_documents”, “answer_question”) builder.add_edge(“answer_question”, END) self.graph = builder.compile()Example runif __name__ == “__main__”: from pathlib import Path # Import the first agent to parse the document from document_ai_agents.document_parsing_agent import ( DocumentLayoutParsingState, DocumentParsingAgent, ) # Step 1: Parse the document using the first agent state1 = DocumentLayoutParsingState( document_path=str(Path(__file__).parents[1] / “data” / “docs.pdf”) ) agent1 = DocumentParsingAgent() result1 = agent1.graph.invoke(state1) # Step 2: Set up the second agent for retrieval and answering state2 = DocumentRAGState( question=”Who was acknowledged in this paper?”, document_path=str(Path(__file__).parents[1] / “data” / “docs.pdf”), pages_as_base64_jpeg_images=result1[“pages_as_base64_jpeg_images”], documents=result1[“documents”], ) agent2 = DocumentRAGAgent() # Index the documents agent2.graph.invoke(state2) # Answer the first question result2 = agent2.graph.invoke(state2) print(result2[“response”]) # Answer a second question state3 = DocumentRAGState( question=”What is the macro average when fine-tuning on PubLayNet using M-RCNN?”, document_path=str(Path(__file__).parents[1] / “data” / “docs.pdf”), pages_as_base64_jpeg_images=result1[“pages_as_base64_jpeg_images”], documents=result1[“documents”], ) result3 = agent2.graph.invoke(state3) print(result3[“response”])With this implementation, the pipeline is complete for document processing, retrieval, and question answering.Example: Using the Document AI PipelineLet’s walk through a practical example using the document LLM & Adaptation.pdf , a set of 39 slides containing text, equations, and figures (CC BY 4.0).Step 1: Parsing and summarizing the Document (Agent 1)Execution Time: Parsing the 39-page document took 29 seconds.Result: Agent 1 produces an indexed document consisting of chunk summaries and base64-encoded JPEG images of each page.Step 2: Questioning the Document (Agent 2)We ask the following question: “Explain LoRA, give the relevant equations”Result:Retrieved pages:Source: LLM & Adaptation.pdf License CC-BYResponse from the LLMImage by author.The LLM was able to include equations and figures into its response by taking advantage of the visual context in generating a coherent and correct response based on the document.ConclusionIn this quick tutorial, we saw how you can take your document AI processing pipeline a step further by leveraging the multi-modality of recent LLMs and using the full visual context available in each document, hopefully improving the quality of outputs that you are able to get from either your information extraction or RAG pipeline.We built a stronger document segmentation step that is able to detect the important items like paragraphs, tables, and figures and summarize them, then used the result of this first step to query the collection of items and pages to give relevant and precise answers using Gemini. As a next step, you can try it on your use case and document, try to use a scalable vector database, and deploy these agents as part of your AI app.Full code and example are available here : https://github.com/CVxTz/document_ai_agentsThank you for reading ! 😃Build a Document AI pipeline for ANY type of PDF With Gemini was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story. nlp, document-ai, agents, hands-on-tutorials, llm Towards Data Science – MediumRead More

Add to favorites

Add to favorites

0 Comments